Apple Vision Pro, with 7 years of ARKit

Hi frens,

I’ve been preparing a talk on ARKit, reviewing what Apple has done over the past 7 years.

This year at WWDC23, there is no ARKit 7. Instead, there is a new term, “spatial computing”, for the brand-new Apple Vision Pro.

So what’s in the box for “spatial computing” and 7 years of ARKit?

Here’s my tweet thread. Slides included. Like the tweet if it helps!

How to develop for Apple Vision Pro?

To understand the foundation of Apple’s spatial computing, I gave a talk summarizing 7 years of ARKit. Here are the slides:

Made with @iAPresenter and This by @Tinrocket

—

Is AR only about placing objects, or face effects?

No. AR is about understanding the environment, with sensors.

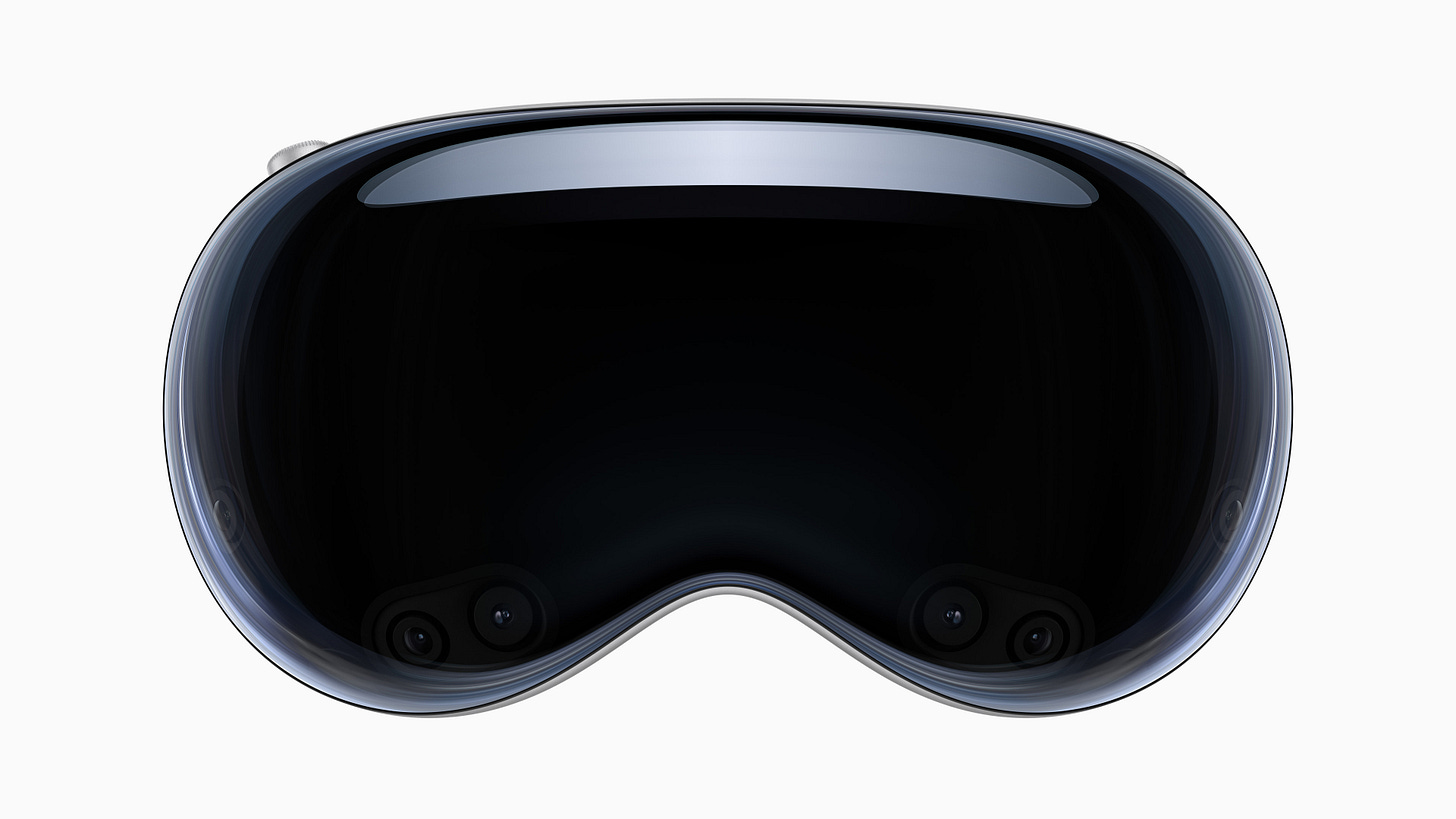

What makes Apple’s product unique in the AR space? It’s all about the sensors.

(Starting at 1:50:12 in the WWDC 2023 Keynote)

—

Apple Vision Pro is the ultimate product packed with all sensors.

All the magical technologies have been developed in ARKit, e.g. persistent AR, shared experiences, object detection, motion capture, RoomPlan, etc.

Apple has done it again, through a tight integration of hardware and software.

—

Currently, "the best way to use apps on visionOS is used in indoor environments." from Reinhard K (Apple) on WWDC 2023 Slack.

Guess what? Apple has also been developing "Location Anchor" in a number of cities, which paves the way for future outdoor AR experiences.

The future is in your hands. Excited?

—

Lastly, here are the best WWDC session videos to get started with spatial computing:

3D user interface

Some people argue that Apple Vision Pro is just Apple’s first TV.

I honestly agree with that. A TV in depth.

Apple has been experimenting with 3D UI for years. In the first few versions of iOS 7, there was a cool “parallax effect” that made the whole user interface look 3D. Andy Matuschak, an ex-Apple employee, reflected on that.

Apple TV brought it back with its focus system, which was later used in more places, including Apple Vision Pro.

3D user interaction

With the introduction of the iPhone 6s and Apple Watch, there was “Force Touch” (later renamed “3D Touch”), which tried to bring user interaction into 3D.

It doesn’t work intuitively, and I don’t like it. You never know whether a UI element supports 3D Touch before trying it. Yikes.

Furthermore, 3D Touch was just another “long press”.

But there’s something fundamentally unique about Apple Vision Pro: you control it with just your eyes, hands, and voice.

It’s just like the original iPhone moment, all over again.

In 2007: Why did other smartphones at the time come with a hardware keyboard or a stylus?

In 2023: Why do other VR headsets require users to hold controllers?

Input on mobile phones was indeed a hard problem. That was why Apple executive Scott Forstall froze all iPhone development to focus on the software keyboard for a few weeks. The person responsible for the software keyboard, Ken Kocienda, even wrote a book about that history, “Creative Selection”.

Input on VR/AR devices has been hard, too, until Apple Vision Pro. Besides intuitive eye tracking and hand gestures, there will be direct touch in the air, too! It’s like a sci-fi movie coming true, again!

Will Apple Vision Pro become the magical device (except for the magic price of $3,499 😉)? We’ll see next year.

Your friend,

Denken